Project 2 Policies

This page outlines all the logistics of this project. All information about the project content is on the other page; this page does not contain any project content.

Overview

In this project, you will be provided with some cryptographic library functions. You will need to use those cryptographic library functions to design a secure file sharing system, which will allow users to log in, store files, and share files with other users, even in the presence of attackers. Then, you will implement your design by filling in 8 functions that users of your system can call to perform file operations.

This project is heavily design-oriented. The starter code we give you is very limited (it’s not much more than 8 function signatures), so you will need to think of a design from scratch that satisfies the functionality and security requirements.

For this project, you may either work alone, or in a team of two. We recommend working in teams of two, since it helps to talk through the challenging components of this project with a partner.

Grading Breakdown and Deadlines

Project 2 is worth a total of 150 points, broken down as follows:

| Task | Due | Points |
|---|---|---|
| Spec Quiz | Friday, September 27, 2024 | 5 |
| Design Doc Checkpoint | Tuesday, October 08, 2024 | 15 |
| Testing Checkpoint | Wednesday, October 23, 2024 | 5 |
| Final Design Doc | Friday, November 01, 2024 | 5 |
| Final Test Case Coverage | Friday, November 01, 2024 | 20 |
| Final Autograded Code | Friday, November 01, 2024 | 100 |

Checkpoint: Spec Quiz

Deliverable: To begin this assignment, please complete the Spec Quiz assignment.

The intent of the spec quiz is to help guide your understanding of the scope and requirements of this project. Understanding the spec is very important, as any mistakes in your understanding of what’s required will derail your design and make later fixes to your design/code extremely painful.

Checkpoint: Design Document

Deliverable: Please submit a design document to the Design Checkpoint assignment containing:

- At most 2 pages of text, describing your draft design (e.g. answering the “Design Questions” in green boxes throughout the spec)

- A diagram showing how you’re organizing the data that you’re storing. (The diagram is not part of the 2-page limit.)

Before writing any code, you will start by writing down the design that you intend to implement.

Your design document can include any details that you think are important, including, but not limited to: What data structures (i.e. struct definitions) will you be using? How will you organize the data you need to store? When a user calls a function, what steps will you take? What cryptographic functions will you be using, and what inputs will you provide to those functions (e.g. what key will you use)?

You can organize your document in any way that seems clear to you. If you’re having trouble organizing your design doc, note that we have several “Design Questions” in green boxes throughout the spec. One way to organize your design doc is by answering each of those design questions, one by one.

For the checkpoint, you will need to submit a design document with your draft design so far. Your design does not need to be perfect, but should have significant thought put into it. The more you’ve thought about your design for the checkpoint, the more feedback we can offer you before you start coding. Having a stronger design for the checkpoint will also reduce the amount of re-coding and re-designing you need to do while coding.

Your design document must be readable and typed. Please limit your design document to 2 pages of text with 11 pt font or greater. There is no minimum length, as long as you’ve given a complete overview of your design (e.g. answered all the design questions in the spec).

It’s natural to feel that 2 pages is too short to describe your whole design. Our suggestion is to keep a detailed design document for yourself, which can be as long as you’d like. Then, once you’re satisfied with your design, summarize the key points of your design into a 2-page document that you submit for the checkpoint.

Your design document must also include a diagram that visualizes how you’re organizing the data that you’re storing. The diagram can be on a third page (it doesn’t count against your 2-page limit). You can use any drawing tool (e.g. hand-drawn, Miro, Draw.io, Docs, Publisher, etc.) and the diagram can be in any format that seems clear to you. If you’re having trouble with your diagram, you could check out our suggested template (LaTeX, PDF, Google doc).

Checkpoint: Design Review

After you’ve submitted your design document, your project group should sign up for one 20-minute design review with a staff member. During this design review, you will present your design and receive feedback on it. Your design will be graded for correctness.

Design reviews tend to be more efficient in-person, but we’ll have some remote time slots if needed.

Deliverable: Sign up for a design review using the Signup Genius links on EdStem.

Policies for scheduling a time slot:

- You need to have a design document submitted by the time of your design review.

- Both partners must be present for design reviews. We reserve the right to take off points if both partners are not present for the design review.

- Please only sign up for a design review if you are ready. A design review is most useful to you if you are ready to explain your design and discuss improvements with a TA.

- Please check to make sure your time zone is correct when booking your design review.

- Do not sign up for multiple design reviews. We don’t have staff-hours to hold multiple reviews for one group, and we reserve the right to take off points if you sign up for multiple slots.

- Do not show up to a design review sick. Please cancel (see below for how to cancel) or email your TA to convert the design review to a remote one.

- However, if you request a remote design review due to sickness but your TA does not respond in time (and you do not receive a Zoom link), you must cancel your slot (see below).

- You are allocated 20 minutes for your design review from when your appointment on Signup Genius starts. Unfortunately, if you show up late, we cannot grant any additional time.

- If you sign up for a slot and don’t show up, we reserve the right to take off points, and we can’t promise that we’ll have time to schedule another time slot for you.

Remote design reviews:

- We are offering remote design reviews this semester. If you need a remote design review, sign up for a design review that has REMOTE as its location (listed as “TA Name (REMOTE)”). Design reviews without the remote tag must be held in person at the location specified in the calendar invite.

Cancelling/rescheduling a design review:

- To cancel an appointment slot, remove yourself from the Signup Genius slot. If you need to reschedule, please cancel at least 24 hours in advance. If you need to cancel within 24 hours, you should additionally make a private post on Ed, and email the staff member hosting your design review (copy cs161-staff@berkeley.edu) with subject “[CS161] Cancelling Design Review”, explaining why.

- If you sign up for a slot and then either cancel within 24 hours or don’t show up (including due to time zone mixups), we reserve the right to take off points, and we can’t promise that we’ll have time to schedule another time slot for you.

Grading:

- Everybody starts out with a score of 15/15 points. We will remove points for inadequate designs. Designs that are missing sections, in whole or in part, will be docked points even if the rest of the design is adequate. Design reviews where the students are not familiar with their own design will be docked points even if the design on paper is adequate.

- Please do not resubmit your design checkpoint after the start of your design review. You will risk getting a 0 if so (as design checkpoints are graded during design reviews).

Checkpoint: Testing

Deliverable: Please submit your tests, in the client_test.go file, to the Testing Checkpoint assignment. We recommend submitting by linking your GitHub account to Gradescope and selecting the relevant repository.

On submission to this assignment, the autograder will run the tests you wrote in client_test.go against the staff implementation of client.go. See the Autograder: Test Coverage section for more details about test coverage. In our staff implementation, we’ve labeled each flag as either testing for integrity or not. As a caveat, in order to receive full credit, you’ll need to hit at least one flag that tests for integrity. Your score for the testing checkpoint is determined solely by the tests you write (hence, it is possible to get a full score on the testing checkpoint without any code in client.go).

To receive full credit on the testing checkpoint, you’ll need to write test cases that hit at least 5 flags. For example, hitting 4 non-integrity flags will earn you 4/5 on the testing checkpoint, and hitting 5 non-integrity flags (with no integrity flags) also earns only 4/5. Hitting 4 non-integrity flags and 1 integrity flag will get you full credit, and so will hitting 3 non-integrity flags and 2 integrity flags.
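The scoring rule above can be summarized as a small function. This is a minimal sketch of one reading of the policy, not the autograder's actual logic: `checkpointScore` is a hypothetical name, and the cap at 4/5 without an integrity flag is inferred from the examples given.

```go
package main

import "fmt"

// checkpointScore is a hypothetical helper (not part of the starter code or
// the autograder) that models one reading of the testing-checkpoint rules:
// each flag hit earns 1 point, the total is capped at 5, and a score of 5
// additionally requires at least one integrity flag.
func checkpointScore(nonIntegrity, integrity int) int {
	score := nonIntegrity + integrity
	if score > 5 {
		score = 5 // the checkpoint is worth 5 points in total
	}
	if integrity == 0 && score > 4 {
		score = 4 // without an integrity flag, the score tops out at 4/5
	}
	return score
}

func main() {
	// The four cases described in the policy.
	fmt.Println(checkpointScore(4, 0)) // 4
	fmt.Println(checkpointScore(5, 0)) // 4
	fmt.Println(checkpointScore(4, 1)) // 5
	fmt.Println(checkpointScore(3, 2)) // 5
}
```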

By design, not all flags are included in the testing checkpoint (we want to encourage students to write interesting edge cases). This means your test coverage score may increase when you submit to the final Autograder assignment, even without writing more tests (congratulations if so).

The intent of the testing checkpoint is to ensure that you are on track to complete the project. Many students find that writing tests before coding their client.go gives them a better understanding of the APIs. We do not recommend rushing into coding your client.go file until you have written at least a couple of test cases and had your design review; remember that one of our security principles is to design security in from the start. Retrofitting a codebase that passes the basic tests with the security measures needed for the final autograder is not recommended, so please start this early!

Finding Flags

We know it is difficult to write a lot of test cases without knowing what the flags represent. However, in order to best simulate a real-world environment, course staff will not answer any questions about what the flag numbers on the autograder correspond to, nor give hints as to what the flags are. This is why we are encouraging you to start testing early via this checkpoint!

To write more robust test cases, we recommend going through the Design Questions listed throughout the spec and thinking through what security tests you could perform. Furthermore, each API lists the cases in which a function should succeed and fail; these make great tests.

Test Writing

We recommend following our testing workflow when building out your tests.

Autograder: Implementation

Deliverable: Please submit your implementation, in the client.go file, to the Autograder assignment. We recommend submitting by linking your GitHub account to Gradescope and selecting the relevant repository.

Before the deadline, the autograder will only check that your code compiles, and run the same basic tests that were provided in the starter code. You will not be able to see your actual score for the project before the deadline.

After the deadline, we will run all the functionality and security tests on your design, and release your final autograder score. Almost all tests for this project are “hidden” and are not run until after the deadline. This is done to simulate a real-world security environment: attackers don’t tell you ahead of time what their attacks will be, so you have to think proactively to defend against potential attacks. We encourage you to write your own tests to check the functionality requirements and simulate the attacks that we’ll be running on your system.

We have many years of accumulated knowledge about attacks, while you only have one semester to think about the project. Therefore, it’s expected that your design will not score full points on the autograder, and we’ll account for this when computing final grades.

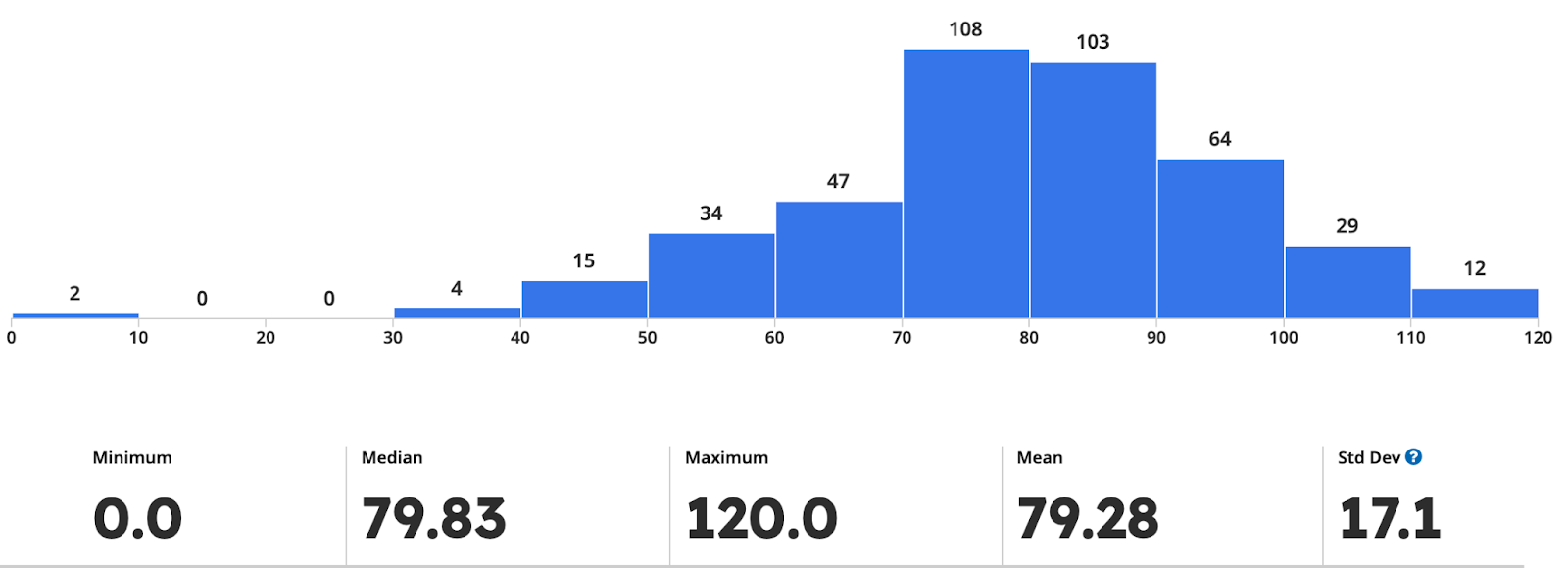

Below is an example of a Project 2 score distribution from a previous semester. It doesn’t look like our usual project distributions (where most people get full credit). Instead, the distribution looks more like a typical CS 161 exam curve. If you’d like, you can think of this assignment as a long take-home exam.

Each failed test (e.g. a functionality requirement that doesn’t work on your system, or an attack that your system doesn’t defend against) incurs a multiplicative penalty. For example, if every test has a 10% penalty, then each failed test multiplies your total score by 0.9. In this example, failing one test results in a score of 90%, failing two tests results in a score of 81% (instead of 80% in the additive penalty model), failing three tests results in a score of 72.9% (instead of 70% in the additive penalty model), and so on. We have lots of tests, and even a submission that scores relatively high will fail many of them. This grading system ensures that each subsequent failed test has less of an impact on your overall grade.

Your code cannot use any external libraries beyond the ones we’ve imported. Don’t try any adversarial behavior on the autograder (e.g. don’t try to DoS autograder resources). Attempts to circumvent the autograder will be considered academic dishonesty.

Autograder: Test Coverage

Deliverable: Please submit your tests, in the client_test.go file, to the Autograder assignment. We recommend submitting by linking your GitHub account to Gradescope and selecting the relevant repository.

We’ve provided some basic tests in the starter code, but you will need to write the majority of tests yourself.

To compute your test coverage score, we will run your tests on the staff reference implementation of client.go. The staff implementation contains 34 specially flagged lines of code that correspond to interesting behavior (e.g. we might flag a line of code that returns an error if the HMAC is incorrect). We check how many flagged lines of code are executed as a result of running your tests.

Each flagged line of code that your tests cause to run is worth 1 coverage flag point. To receive full credit for test case coverage, your tests need to cause at least 20 of the flagged lines of code to execute. We cannot answer any questions about what the flag numbers on the autograder correspond to. It’s okay if you don’t score all 20 points here; it’s more important to make sure that your own design is thoroughly tested.

You may check your test case coverage by submitting to the autograder on Gradescope at any time before the project deadline. The coverage score you see on Gradescope is your final coverage score for the project (there are no hidden coverage flag points).

Note that getting a full score on the coverage flags does not guarantee anything about your own implementation. It can be a good indicator that you’ve written thorough tests, but there is no guarantee that the tests you’ve written are the same as the hidden tests we’ll be running on your own implementation.

We reserve the right to re-run the autograder on all submissions before releasing final scores. Therefore, if your code coverage score is non-deterministic, we cannot promise that the score will stay the same after re-running the autograder.

Final Design Document

Deliverable: Please submit an updated version of your design document to the Final Design Doc assignment.

This document should reflect the final design you’ve implemented in code. If you made modifications after the checkpoint, you can modify your checkpoint design document. If you didn’t update your design, it’s also okay to re-submit the same document you did for the checkpoint.

We’ll grade your final design document on completion and effort, not correctness. The correctness of your final design is checked by running the autograder on your code.